Preamble

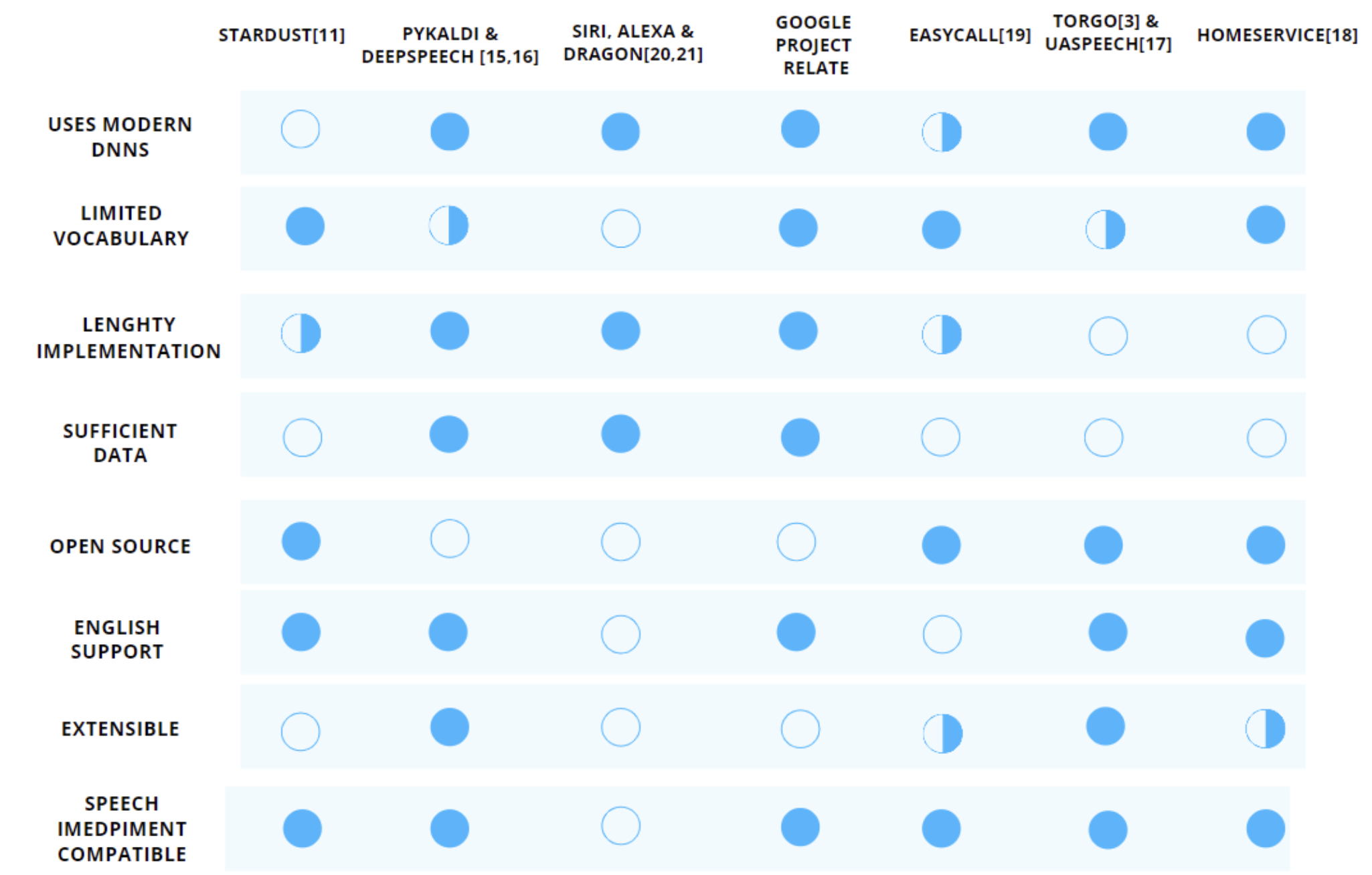

In my "ASR for Dysarthria" project, I outlined an interface solution to the problems that quadriplegics face.

Please navigate to that project from the drop-down menu, as it was the preliminary work for this one.

That solution was limited by the current state of the art in automatic speech recognition (ASR), which is ineffective for dysarthric speech.